
VSCode Remote Development via SSH and Tunnel

This document describes how to set up a remote connection between your local VS Code and the Cannon cluster using two approaches: SSH and Tunnel. Either option lets you carry out remote development work on the cluster using VS Code, with seamless integration of your local environment and cluster resources.

Important:

We encourage all our users to utilize the Open On Demand (OOD) web interface of the cluster to launch VS Code when remote development work is not required. The instructions to launch VS Code using the Remote Desktop App are given here.

Prerequisites

- Recent version of VS Code installed for your local machine

- Remote Explorer and Remote SSH extensions installed, if not already present by default

FASRC Recommendation

Based on our internal evaluation of the three approaches described below and on feedback from our user community, we recommend Approach I: Remote – Tunnel via batch job over the other two for launching VSCode on a compute node. This approach submits a batch job to the scheduler on the cluster, which makes it resilient to network glitches that could disrupt a VSCode session on a compute node launched using Approach II or III.

Note: We limit our users to a maximum of 5 login sessions, so be aware of the number of VSCode instances you spawn on the cluster.

Approach I: Remote – Tunnel via batch job

Note: The method described here and in Approach II will launch a single VS Code session at a time for a user on the cluster. The Remote – Tunnel approaches do not support concurrent sessions on the cluster for a user.

In order to establish a remote tunnel between your local machine and that of the cluster, as an sbatch job, execute the following steps.

- Copy the vscode.job script:

      #!/bin/bash
      #SBATCH -p test          # partition. Remember to change to a desired partition
      #SBATCH --mem=4g         # memory in GB
      #SBATCH --time=04:00:00  # time in HH:MM:SS
      #SBATCH -c 1             # number of cores

      set -o errexit -o nounset -o pipefail
      MY_SCRATCH=$(TMPDIR=/scratch mktemp -d)
      echo $MY_SCRATCH

      #Obtain the tarball and untar it in $MY_SCRATCH location to obtain the
      #executable, code, and run it using the provider of your choice
      curl -Lk 'https://code.visualstudio.com/sha/download?build=stable&os=cli-alpine-x64' | tar -C $MY_SCRATCH -xzf -

      #VSCODE_CLI_DISABLE_KEYCHAIN_ENCRYPT=1 $MY_SCRATCH/code tunnel user login --provider github
      VSCODE_CLI_DISABLE_KEYCHAIN_ENCRYPT=1 $MY_SCRATCH/code tunnel user login --provider microsoft

      #Accept the license terms & launch the tunnel
      $MY_SCRATCH/code tunnel --accept-server-license-terms --name cannontunnel

  The vscode.job script uses the microsoft provider authentication using HarvardKey. If you would like to change the authentication method to github, substitute microsoft -> github and make changes to the vscode.job script accordingly.

- Submit the job from a private location (somewhere that only you have access to, for example your $HOME directory) from which others can’t see the log file

      $ sbatch vscode.job

- Look at the end of the output file

      $ tail -f slurm-32579761.out
      ...
      To sign in, use a web browser to open the page https://microsoft.com/devicelogin and enter the code ABCDEFGH to authenticate.

  Open a web browser, enter the URL, and the code. After authentication, wait a few seconds to a minute, and print the output file again:

      $ tail slurm-32579761.out
      *
      * Visual Studio Code Server
      *
      * By using the software, you agree to
      * the Visual Studio Code Server License Terms (https://aka.ms/vscode-server-license) and
      * the Microsoft Privacy Statement (https://privacy.microsoft.com/en-US/privacystatement).
      *
      Open this link in your browser https://vscode.dev/tunnel/cannon/n/home01/jharvard/vscode

- Now, you have two options

- Use a web client by opening vscode.dev link from the output above on a web browser.

- Use vscode local client — see below
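The sign-in code and the tunnel link can also be pulled out of the Slurm output file with a couple of small shell helpers. This is a minimal sketch: the function names device_code and tunnel_url are hypothetical, and the patterns assume the Microsoft sign-in message and the vscode.dev link appear in the form shown in the sample output above.

```shell
# Sketch only: device_code and tunnel_url are illustrative helper names,
# not part of Slurm or the VS Code CLI.

# Print the most recent device-login code from a line such as
# "... and enter the code ABCDEFGH to authenticate."
device_code() {
    sed -n 's/.*enter the code \([A-Za-z0-9-]*\) to authenticate.*/\1/p' "$1" | tail -n 1
}

# Print the most recent vscode.dev tunnel link found in the file.
tunnel_url() {
    grep -o 'https://vscode\.dev/tunnel/[^[:space:]]*' "$1" | tail -n 1
}
```

For example, `device_code slurm-32579761.out` would print the code to enter at the device login page, and `tunnel_url slurm-32579761.out` would print the link to open once authentication has completed.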

Using vscode local client (option #2)

- In your local VS Code (on your own laptop/desktop), install the Remote Tunnel extension (ms-vscode.remote-server)

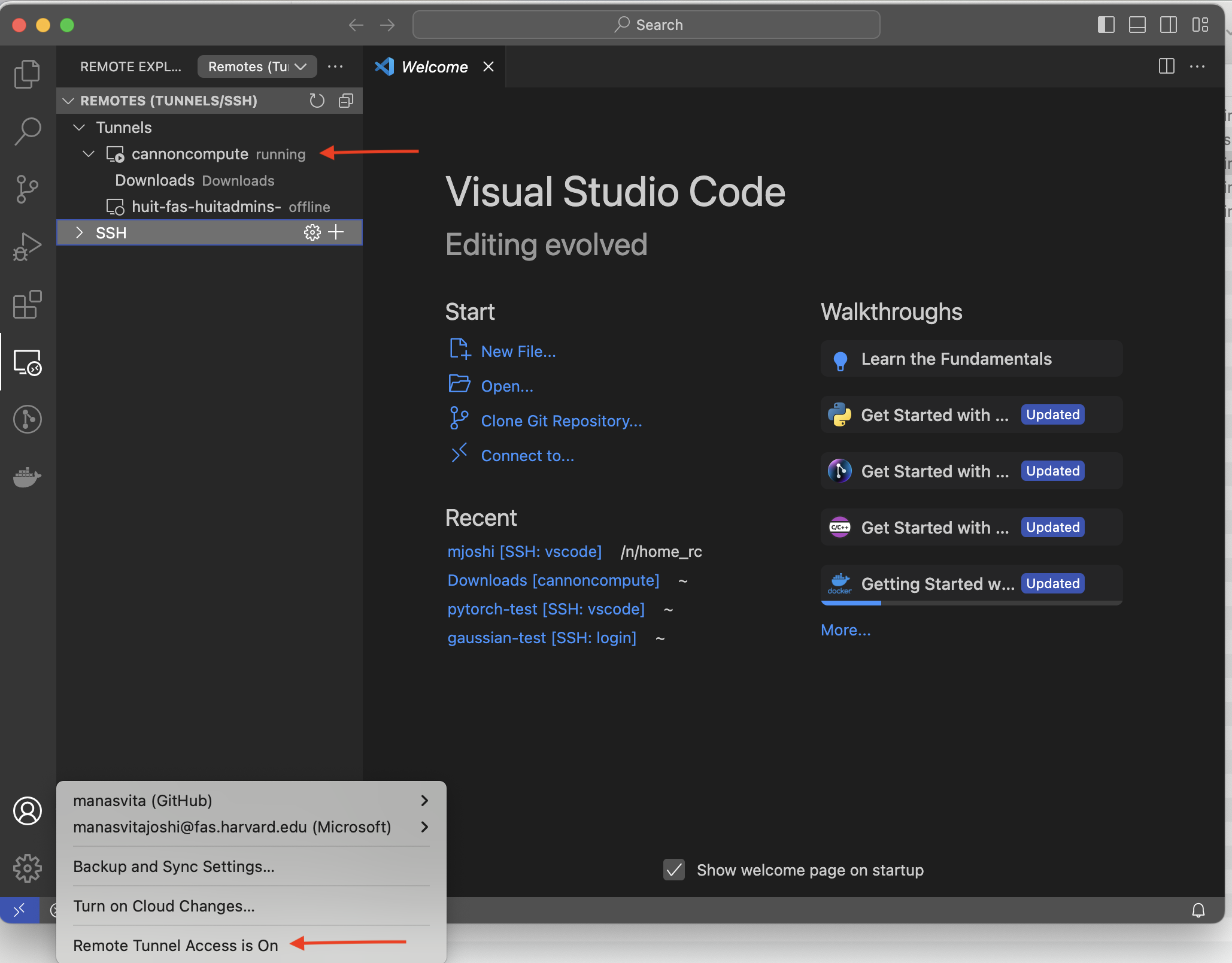

- Click on VS Code Account menu, choose “Turn on Remote Tunnel Access”

- Then choose the authentication method that you used in vscode.job, microsoft or github

- Connect to the cluster:

  - Click on the Remote Explorer icon and pull up the Remote Tunnel drop-down menu

  - Click on cannontunnel to get connected to the remote machine either in the same VS Code window (indicated by ->) or a new one (icon beside ->). Prior to clicking, make sure you see: Remote -> Tunnels -> cannontunnel running

- Finally, once VS Code is connected, you can also open a terminal in VS Code; it will run on the compute node where your submitted job is running.

Enjoy your work using your local VSCode on the compute node.

Approach II: Remote – Tunnel interactive

In order to establish a remote tunnel between your local machine and that of the cluster, as an interactive job, execute the following steps. Remember to replace <username> with your FASRC username.

1. ssh <username>@login.rc.fas.harvard.edu

2. curl -Lk 'https://code.visualstudio.com/sha/download?build=stable&os=cli-alpine-x64' --output vscode_cli.tar.gz

3. tar -xzf vscode_cli.tar.gz

4. An executable, code, will be generated in your current working directory. Either keep it in your $HOME or move it to your LABS folder, e.g.

       mv code /n/holylabs/rc_admin/Everyone/

5. Add the path to your ~/.bashrc so that the executable is always available to you regardless of the node you are on, e.g.,

       export PATH=/n/holylabs/rc_admin/Everyone:$PATH

6. Save ~/.bashrc, and on the terminal prompt, execute the command:

       source ~/.bashrc

7. Go to a compute node, e.g.:

       salloc -p gpu_test --gpus 1 --mem 10000 -t 0-01:00

8. Execute the command:

       code tunnel

9. Follow the instructions on the screen and log in using either your Github or Microsoft account, e.g.: Github Account

10. To grant access to the server, open the URL https://github.com/login/device and copy-paste the code given on the screen

11. Name the machine, e.g.: cannoncompute

12. Open the link that appears in your local browser and follow the authentication process as mentioned in steps# 3 & 4 of https://code.visualstudio.com/docs/remote/tunnels#_using-the-code-cli

13. Once the authentication is complete, you can either open the link that appears on the screen on your local browser and run VS Code from there or launch it locally as mentioned below.

14. On the local VSCode, install Remote Tunnel extension

15. Click on VS Code Account menu, choose “Turn on Remote Tunnel Access”

16. Click on cannoncompute to get connected to the remote machine either in the same VS Code window (indicated by ->) or a new one (icon beside ->). Prior to clicking, make sure you see: Remote -> Tunnels -> cannoncompute running

Note: Every time you access a compute node, the executable, code, will be in your path. However, you will have to repeat step#10 before executing step#16 above in order to start a fresh tunnel.
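Adding the export line to ~/.bashrc by hand works, but re-running the setup can leave duplicate entries. The sketch below appends the line only if it is not already present. The helper name add_path_once is hypothetical, and the directory is the example path used above.

```shell
# Sketch only: add_path_once is a hypothetical helper name.
# Appends "export PATH=<dir>:$PATH" to a startup file unless that exact
# line is already there, so repeated runs do not pile up duplicates.
add_path_once() {
    local dir="$1"
    local rcfile="${2:-$HOME/.bashrc}"
    local line="export PATH=$dir:\$PATH"
    # -x matches the whole line, -F treats the pattern as a fixed string
    if ! grep -qxF "$line" "$rcfile" 2>/dev/null; then
        printf '%s\n' "$line" >> "$rcfile"
    fi
}
```

For example, `add_path_once /n/holylabs/rc_admin/Everyone` followed by `source ~/.bashrc` mirrors the PATH steps above.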

Approach III: Remote – SSH

In order to connect remotely to the cluster using VS Code, you need to edit the SSH configuration file on your local machine.

- For Mac OS and Linux users, the file is located at ~/.ssh/config. If it’s not there, then create a file with that name.

- For Windows users, the file is located at C:\Users\<username>\.ssh\config. Here, <username> refers to your local username on the machine. Same as above, if the file is not present, then create one.
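On Mac OS or Linux, the check-and-create step could be scripted roughly as follows. This is a minimal sketch: ensure_ssh_config is a hypothetical helper name, and the 700/600 permissions are the usual OpenSSH expectations for these paths rather than something this document specifies.

```shell
# Sketch only: ensure_ssh_config is a hypothetical helper, not an OpenSSH tool.
# Creates the SSH directory and an empty config file if they are missing.
# An existing config file keeps its contents.
ensure_ssh_config() {
    local dir="${1:-$HOME/.ssh}"
    mkdir -p "$dir" || return 1
    chmod 700 "$dir"                              # directory accessible only by you
    [ -f "$dir/config" ] || touch "$dir/config"   # create only when absent
    chmod 600 "$dir/config"                       # file readable/writable only by you
}
```

Calling `ensure_ssh_config` with no argument operates on ~/.ssh; the Host entries described below can then be added to the file with any editor.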

There are two ways to get connected to the cluster remotely:

- Connect to the login node using VS Code.

  Important: This connection must be used for writing &/or editing your code only. Please do not use this connection to run Jupyter notebook or any other script directly on the login node.

- Connect to the compute node using VS Code.

  Important: This connection can be used for running notebooks and scripts directly on the compute node. Avoid using this connection for writing &/or editing your code, as this is non-compute work that can be carried out from the login node.

SSH configuration file

Login Node

Adding the following to your SSH configuration file will let you connect to the login node of the cluster only with the Single Sign-On option enabled. The Host is named cannon here, but you can name it whatever you like, e.g., login. In what follows, replace <username> with your FASRC username.

For Mac:

    Host cannon
        User <username>
        HostName login.rc.fas.harvard.edu
        ControlMaster auto
        ControlPath ~/.ssh/%r@%h:%p

For Windows:

The SSH ControlMaster option for single sign-on is not supported for Windows. Hence, Windows users can only establish a connection to the login node by either disabling the ControlMaster option or not having that at all in the SSH configuration file, as shown below:

    Host cannon
        User <username>
        HostName login.rc.fas.harvard.edu
        ControlMaster no
        ControlPath none

or

    Host cannon
        User <username>
        HostName login.rc.fas.harvard.edu

Compute Node

In order to connect to the compute node of the cluster directly, execute the following two steps on your local machine:

Note: Establishing a remote SSH connection to a compute node via VSCode works only for Mac OS. For Windows users, this option is not supported and we recommend they utilize the Remote-Tunnel Approaches I or II for launching VSCode on a compute node.

- Generate a pair of public and private SSH keys for your local machine, if you have not done so previously, and add the public key to the login node of the cluster:

In the~/.sshfolder of your local machine, see ifid_ed25519.pubis present. If not, then generate private and public keys using the command:ssh-keygen -t ed25519 -b 4096Then submit the public key to the cluster using the following command:

ssh-copy-id -i ~/.ssh/id_ed25519.pub <username>@login.rc.fas.harvard.eduThis will append your local public key to

~/.ssh/authorized_keysin your home directory ($HOME) on the cluster so that your local machine is recognized. - Add the following to your local

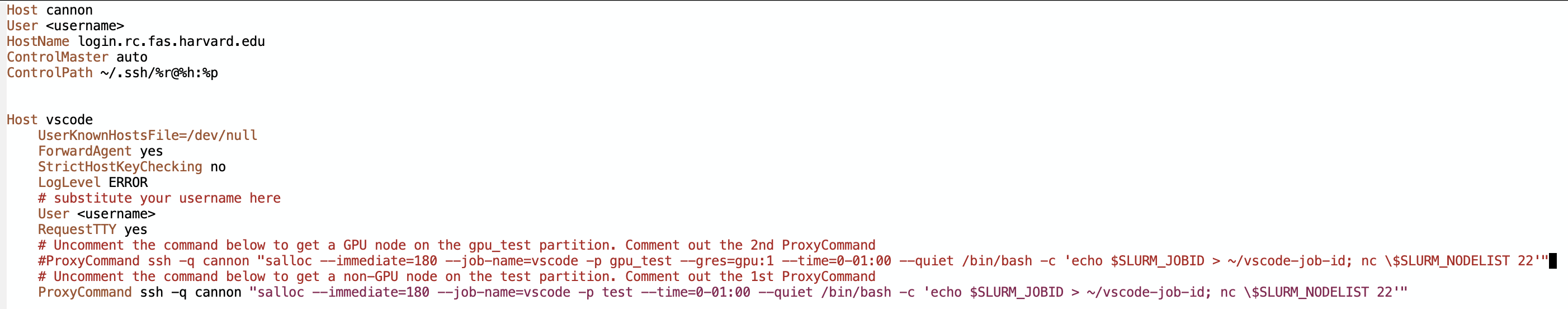

~/.ssh/configfile by replacing<username>with your FASRC username. Make sure that the portion for connecting to the login node from above is also present in your SSH configuration file. You can edit the name of theHostto whatever you like or keep it ascompute. There are two ProxyCommand examples shown here to demonstrate how theProxyCommandcan be used to launch a job on a compute node of the cluster with a desired configuration of resources through thesalloccommand. Uncommenting the first one will launch a job on thegpu_testpartition of the Cannon cluster whereas uncommenting the second one will launch it on thetestpartition.

    Host compute
        UserKnownHostsFile=/dev/null
        ForwardAgent yes
        StrictHostKeyChecking no
        LogLevel ERROR
        # substitute your username here
        User <username>
        RequestTTY yes
        # Uncomment the command below to get a GPU node on the gpu_test partition. Comment out the 2nd ProxyCommand
        #ProxyCommand ssh -q cannon "salloc --immediate=180 --job-name=vscode --partition gpu_test --gres=gpu:1 --time=0-01:00 --mem=4GB --quiet /bin/bash -c 'echo \$SLURM_JOBID > ~/vscode-job-id; nc \$SLURM_NODELIST 22'"
        # Uncomment the command below to get a non-GPU node on the test partition. Comment out the 1st ProxyCommand
        ProxyCommand ssh -q cannon "salloc --immediate=180 --job-name=vscode --partition test --time=0-01:00 --mem=4GB --quiet /bin/bash -c 'echo \$SLURM_JOBID > ~/vscode-job-id; nc \$SLURM_NODELIST 22'"

Note: Remember to change the Slurm directives, such as --mem, --time, --partition, etc., in the salloc command based on your workflow and how you plan to use the VSCode session on the cluster. For example, if the program you are trying to run needs more memory, request that amount with the --mem flag in the salloc command before launching the VSCode session on the cluster; otherwise, the session could fail with an Out Of Memory error.

Important: Make sure to pass the name of the Host used for the login node to the ProxyCommand for connecting to a compute node. For example, here we have named the Host cannon for connecting to the login node. The same name, cannon, is then passed to the ProxyCommand to establish a connection to a compute node via ssh. Passing any other name to ProxyCommand ssh -q would result in the connection not being established.
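One quick way to confirm that the name passed to the ProxyCommand is actually declared in your SSH configuration file is to search the Host lines. The following is a minimal sketch. has_host_entry is a hypothetical helper name, and it only does a literal comparison against each name listed on a Host line, so wildcard patterns are not interpreted.

```shell
# Sketch only: has_host_entry is a hypothetical helper, not an OpenSSH command.
# Succeeds (exit status 0) if the config file has a "Host" line that lists
# the given name literally; wildcard patterns are not expanded.
has_host_entry() {
    local name="$1"
    local config="${2:-$HOME/.ssh/config}"
    awk -v want="$name" '
        tolower($1) == "host" { for (i = 2; i <= NF; i++) if ($i == want) found = 1 }
        END { exit !found }
    ' "$config"
}
```

For example, `has_host_entry cannon && echo "cannon is defined"` checks the login-node entry that the ProxyCommand above relies on.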

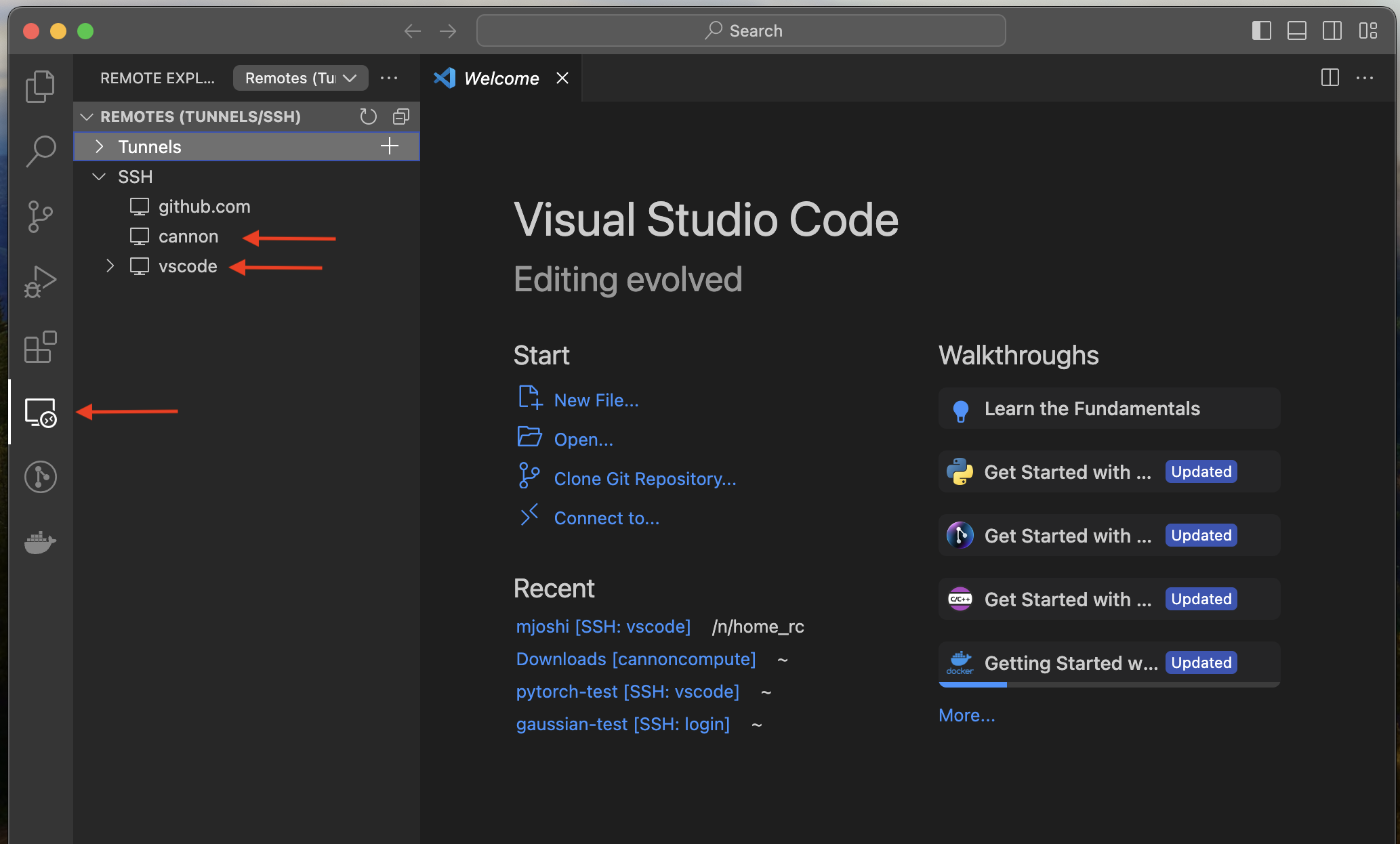

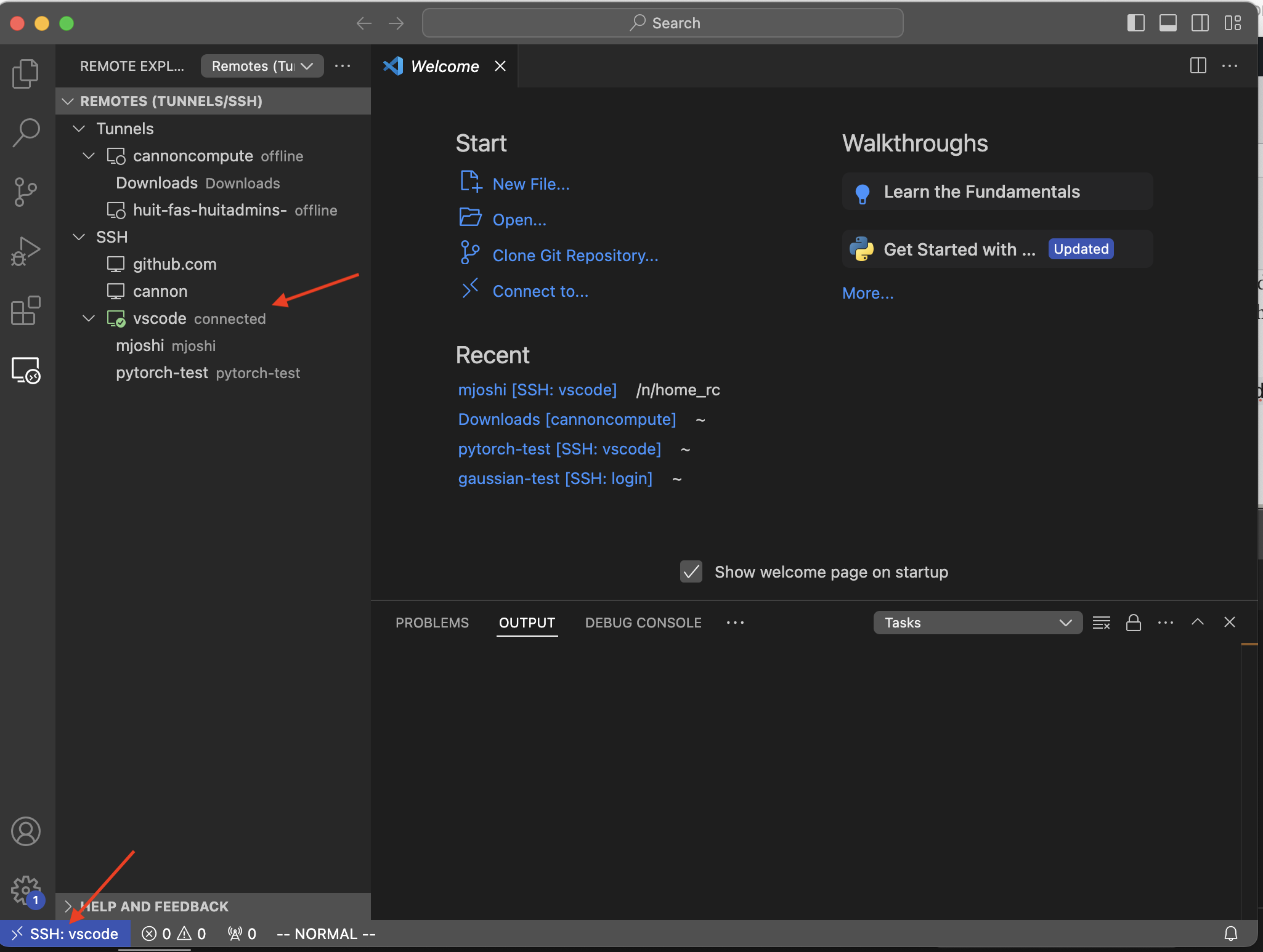

Once the necessary changes have been made to the SSH configuration file, open VS Code on your local machine and click on the Remote Explorer icon on the bottom left panel. You will see two options listed under SSH – cannon and compute (or whatever name you chose for the Host in your SSH configuration file).

Connect using VS Code

Login Node

Click on the cannon option and select whether you would like to continue in the same window (indicated by ->) or open a new one (icon next to ->). Once selected, enter your 2FA credentials in VS Code’s search bar when prompted. For the login node, a successful connection would look like the following.

SSH:cannon.

Compute Node

In order to establish a successful connection to Cannon’s compute node, we need to be mindful that VS Code requires two connections to open a remote window (see the section “Connecting to systems that dynamically assign machines per connection” in VS Code’s Remote Development Tips and Tricks). Hence, there are two ways to achieve that.

Option 1

First, open a connection to cannon in a new window on VS Code by entering your FASRC credentials and then open another connection to compute/vscode on VS Code either as a new window or continue in the current window. You will not have to enter your credentials again to get connected to the compute node since the master connection is already enabled through the cannon connection that you initiated earlier on VS Code.

Option 2

If you don’t want to open a new connection to cannon, then open a terminal on your local machine and type the following command, as mentioned in our Single Sign-on document, and enter your FASRC credentials to establish the master connection first.

ssh -CX -o ServerAliveInterval=30 -fN cannon

Then open VS Code and directly click on compute/vscode to get connected to the compute node. Once a successful connection is established, you should be able to run your notebook or any other script directly on the compute node using VS Code.

Note: If you have a stale SSH connection to cannon running in the background, it could pose potential problems. The session could be killed in the following manner.

    $ ssh -O check cannon
    Master running (pid=#)
    $ ssh -O exit cannon
    Exit request sent.
    $ ssh -O check cannon
    Control socket connect(<path-to-connection>): No such file or directory
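The check-then-exit sequence above can be wrapped in a small function so that the exit request is only sent when a master connection is actually running. This is a minimal sketch: close_master is a hypothetical helper name, and cannon is the Host name used in this document.

```shell
# Sketch only: close_master is a hypothetical helper name.
# Sends "ssh -O exit" to the master connection for a host, but only if
# "ssh -O check" reports that one is running.
close_master() {
    local host="${1:-cannon}"
    if ssh -O check "$host" 2>/dev/null; then
        ssh -O exit "$host"
    else
        echo "No master connection for $host"
    fi
}
```

Running `close_master cannon` before reconnecting from VS Code clears a stale background connection, if there is one.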

VSCode For FASSE

You cannot use Remote SSH to establish a connection between your local device and a FASSE compute node via VSCode, as salloc is not permitted on FASSE for security reasons. However, you can use Remote SSH to connect to FASSE’s login node using VSCode.

For establishing a connection to the compute node on FASSE, launching a Remote Tunnel using Approach I is your only option. However, prior to doing that, make sure you enable access to the internet to launch Remote Tunnel on your browser. Additionally, once the tunnel is established, remember to install extensions as needed prior to starting your work using VSCode.

Add Folders to Workspace on VSCode Explorer

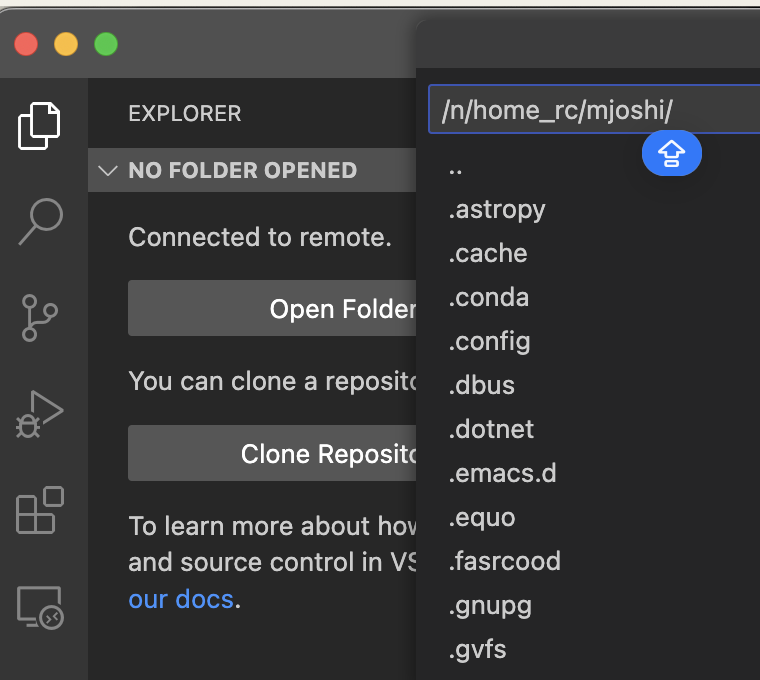

Once you are able to successfully launch a VSCode session on the cluster, using one of the approaches mentioned above, you might need to access various folders on the cluster to execute your workflow. One can do that using the Explorer feature of VSCode. However, on the VSCode remote instance, when you click on Explorer -> Open Folder, it will open $HOME, by default, as shown below.

In order to add another folder to your workspace, especially housed in locations such as netscratch, holylabs, holylfs, etc., do the following:

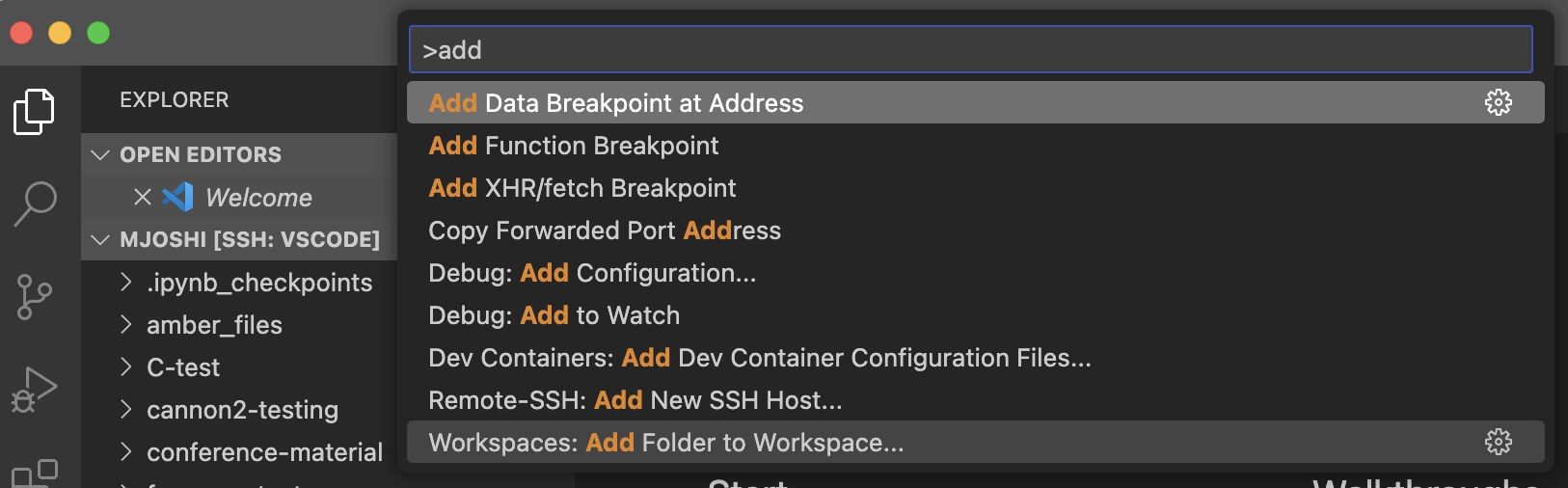

- Type:

>addon VSCodeSearch-Welcomebar and chooseWorkspaces: Add Folders to Workspace.... See below:

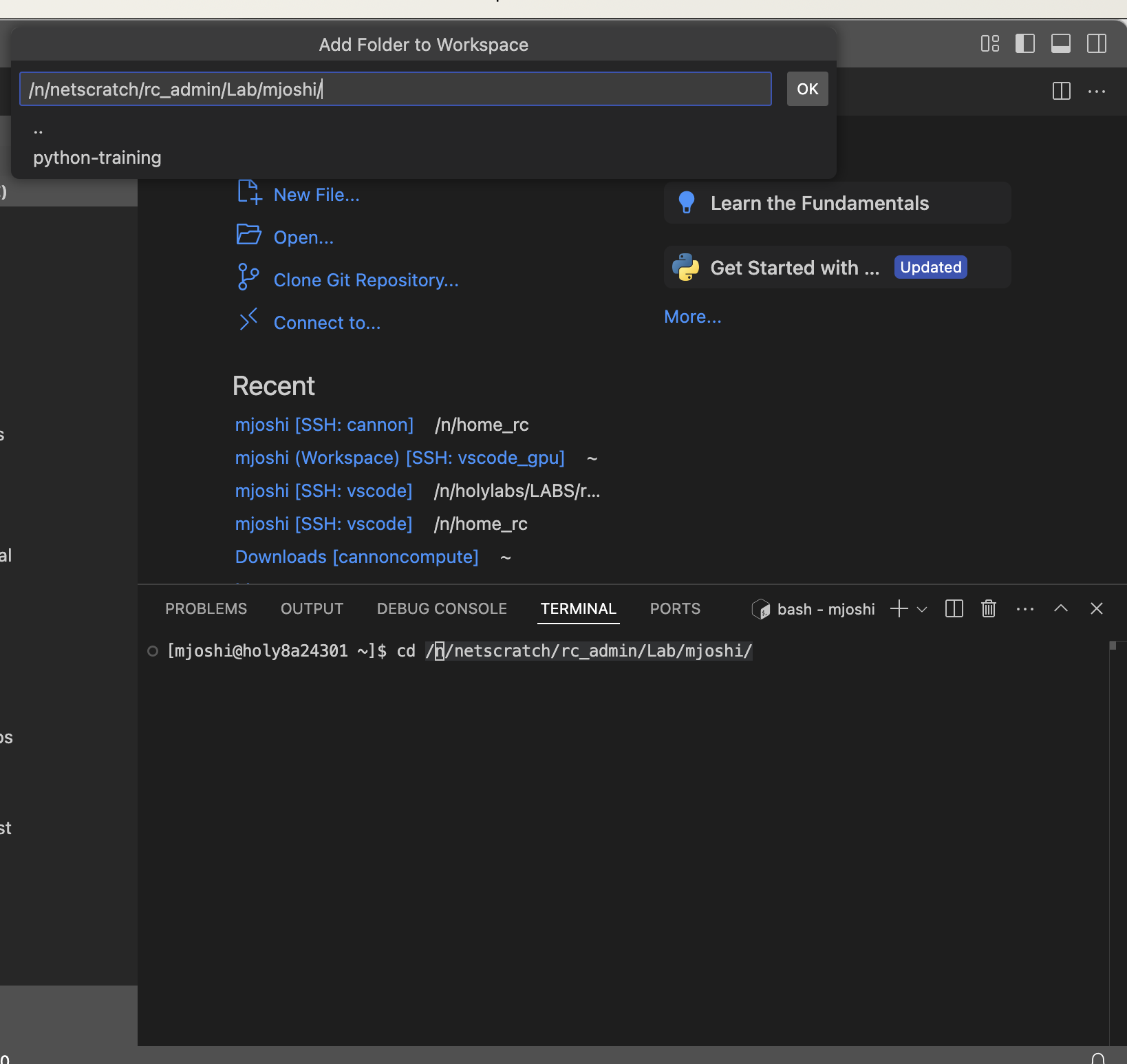

- If you would like to add your folder on

netscratchorholylabsor some such location, first open a terminal on the remote instance of VSCode and type that path. Copy the entire path and then paste it on theSearch-Welcomebar. See below:Do not start typing the path in theSearch-Welcomebar, make sure to copy-paste the full path otherwise VS Code may hang while attempting to list all the subdirectories of that location, e.g., /n/netscratch.

- Click

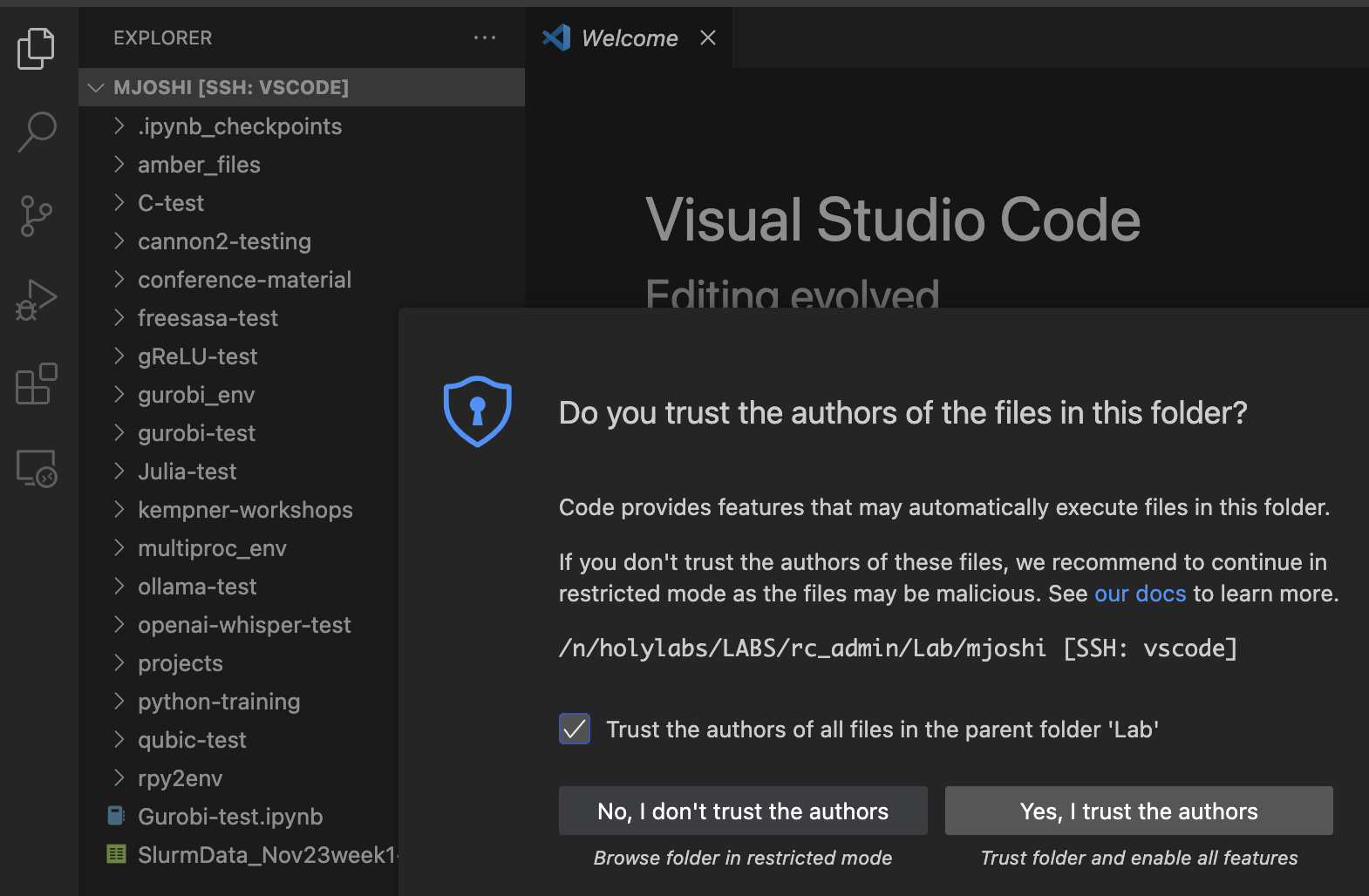

okto add that folder to your workspace - On the remote instance, you will be prompted to answer whether you

trust the authors of the files in this folderand thenReload. Go ahead and click

Go ahead and click yes(if you truly trust them), and let the session reload. - Now you will be able to see your folder listed under

ExplorerasUntitled (Workspace) - This folder would be available to you in your workspace for as long as the current session is active. For new sessions, repeat steps #1-4 to add desired folder(s) to your workspace

Best Practices

- Maximum of 5 login sessions are allowed per user at a time. Be aware of the number of VS Code instances you spawn on the cluster

- Login node session

  - Use for writing &/or editing your code only

  - Do not use it to run Jupyter notebook, R, Matlab, or any other script

- Compute node session

  - Use for running notebooks & scripts

  - Avoid using for writing &/or editing your code as this is non-compute work

- For interactive sessions, better to be on VPN to get stable connection

- Remember to close jobs that are launched through interactive or sbatch VSCode sessions as follows:

  - click on the icon next to Launchpad on VSCode GUI

  - click on “Close Remote Connection”

  - For Remote Tunnel session, if VS Code work is complete, in addition to above, execute the following to ensure that the Slurm job is also cancelled:

        squeue -u <username>
        scancel <JOBID>
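The squeue and scancel steps can be combined so that leftover tunnel jobs are found by name. This is a minimal sketch: cancel_jobs_named is a hypothetical helper name. It assumes the job name is vscode.job, which is Slurm's default (the batch script's file name) when no --job-name is set, as in the Approach I script, and vscode for jobs started through the ProxyCommand above.

```shell
# Sketch only: cancel_jobs_named is a hypothetical helper name.
# Lists your jobs with squeue and cancels those whose name matches exactly.
cancel_jobs_named() {
    local name="$1"
    local user="${2:-$USER}"
    # -h drops the header line; %i is the job ID and %j is the job name
    squeue -u "$user" -h -o '%i %j' |
        awk -v want="$name" '$2 == want { print $1 }' |
        while read -r jobid; do
            echo "Cancelling job $jobid"
            scancel "$jobid"
        done
}
```

For example, `cancel_jobs_named vscode.job` cancels jobs submitted from the vscode.job script, leaving your other jobs untouched.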

Troubleshooting VSCode Connection Issues

- Make sure that you are on VPN to get a stable connection.

- Match the name being used in the SSH command to what was declared under “Host” in your SSH config file for the login node.

- Make sure that the --mem flag has been used in the ProxyCommand in your SSH config file and that enough memory is being allocated to your job. If you already have it, then try increasing it to see if that works for you.

- Open a terminal and try connecting to a login and compute node (if on Mac) by typing ssh <Host> (replace <Host> with the corresponding names used for login and compute nodes). If you get connected, then your SSH configuration file is set properly.

- Consider commenting out conda initialization statements in ~/.bashrc to avoid possible issues caused by initialization.

- Delete the bin folder from .vscode-server or .vscode &/or remove the Cache, CachedData, CachedExtensionsVSIXs, Code Cache, etc. folders. You can find these on Cannon in $HOME/.vscode/data &/or $HOME/.vscode-server/data/.

- Check your $HOME quota and remove likely culprits, such as ~/.cache. See instructions to clear disk space on Home directory full.

- Make sure that there are no lingering SSO connections. See the Note at the end of Approach III – Remote SSH section.

- Failed to parse remote port: Try removing .lock files.

- Try implementing Approach I – Remote Tunnel via batch job to see if you are able to launch a Remote Tunnel as an sbatch job in the background, so that your work is not disrupted by network glitches.

- If you continue to have problems, consider coming to our office hours to troubleshoot this live
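Before deleting anything, it can help to see how much space the usual suspects occupy. The sketch below reports the size of the directories named in the list above when they exist. report_usage is a hypothetical helper name, and the directory list is only the set mentioned in this section, not an exhaustive one.

```shell
# Sketch only: report_usage is a hypothetical helper name.
# Prints the size in kilobytes of each candidate directory that exists
# under the given base directory (defaults to $HOME).
report_usage() {
    local base="${1:-$HOME}"
    local d
    for d in .cache .vscode .vscode-server; do
        if [ -d "$base/$d" ]; then
            du -sk "$base/$d"
        fi
    done
}
```

Running `report_usage` on a login node lists only the directories that are present, so an empty result means none of them exist in your $HOME.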

Dev Containers for custom software environments

VS Code supports opening a source code repository in a container. The container software environment is defined in a JSON file that adheres to the Development Containers (dev containers) specification (https://containers.dev/). The same git repository can be used to develop and run code in the same software environment on the FASRC cluster, a GitHub Codespace, or your laptop.

Using Dev Containers on the FASRC Cluster

It is recommended to (1) use VS Code in an OOD Remote Desktop session, or (2) use Approach I: Remote – Tunnel via batch job with a VS Code local client. The Visual Studio Code Dev Containers extension is currently not supported in the web-browser-based VS Code (https://vscode.dev) with a remote tunnel (see microsoft/vscode-remote-release issue #9059).

Setup

- Install the Visual Studio Code Dev Containers extension

- Configure the extension to use Podman on the FASRC cluster. Change the following settings in Code > Settings > Extensions > Dev Containers:

  - Dev > Containers: Docker Path – change “docker” to “podman”

  - Dev > Containers: Docker Socket Path – change “/var/run/docker.sock” to “/tmp/podman-run-<uid>/podman/podman.sock”, replacing “<uid>” with your FASRC user ID. Your FASRC user ID can be determined by running the command “id -u” on the FASRC cluster:

        [jharvard@holylogin05 ~]$ id -u
        21442

NOTE: if using a local VS Code (not OOD Remote Desktop), you will need to revert these changes in the future if you use dev containers via a local Docker installation
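To avoid typos when filling in the Docker Socket Path setting, the value can be printed on the cluster from your user ID. This is a minimal sketch: podman_socket_path is a hypothetical helper name, and the path template is the one given in the setting above.

```shell
# Sketch only: podman_socket_path is a hypothetical helper name.
# Prints the socket path for the Dev Containers "Docker Socket Path" setting,
# using the given user ID or, by default, the output of "id -u".
podman_socket_path() {
    local uid="${1:-$(id -u)}"
    printf '/tmp/podman-run-%s/podman/podman.sock\n' "$uid"
}
```

Run `podman_socket_path` in a terminal on the cluster and paste the result into the setting.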

Launch

See the VS Code dev container quick start for how to open a repository in a dev container.

Known Limitations

The following are known limitations using VS Code Dev Containers on the FASRC cluster with Podman:

- The docker-in-docker dev container feature is currently unsupported

- The docker-outside-of-docker dev container feature requires a workaround to use podman on the host instead of a Docker daemon:

  1. In a VS Code terminal (on the FASRC cluster), issue the following command to start an API service that listens on /tmp/containers-user-$(id -u)/podman/podman.sock on the compute node:

         nohup podman system service -t 0 >/dev/null 2>&1 &

  2. In the relevant devcontainer.json file, ensure the following settings are listed (see here for a complete example devcontainer.json):

         "remoteUser": "root",
         "mounts": ["source=/tmp/podman-run-1234/podman/podman.sock,target=/var/run/docker-host.sock,type=bind"], // replace "1234" with your UID
         "remoteEnv": {
             "DOCKER_BUILDKIT": "0"
         },
         "workspaceFolder": "${localWorkspaceFolder}",
         "workspaceMount": "source=${localWorkspaceFolder},target=${localWorkspaceFolder},type=bind"
