Data Storage (Offerings, Workflow, Costs)

FAS Research Computing (FAS RC) is transitioning to a new storage infrastructure, incorporating over 70 pebibytes of new data storage. This will ensure FAS RC remains at the forefront of research, with an innovative, scalable, and reliable data storage environment that will meet the evolving needs of the Harvard community.

The transition consolidates and modernizes a significant portion of existing storage filesystems by migrating research data to new and improved hardware.

Benefits:

- Enhanced support for computationally heavy workflows including AI and Machine Learning

- Improved researcher experience with better visualization and storage tracking capabilities, including data lifecycle management

- Streamlined and consolidated storage environments reducing the need for migrations and complex data workflows

- More resilient and reliable hardware decreasing the potential for security risks and vulnerabilities

- Built-in storage backups and encryption to prevent data loss

- Greater technological efficiency, reducing operational costs while allowing for long-term growth and scalability

Improvements:

- Scalable, cost-effective storage designed to support researcher demands and lifecycle trends

- Improved service quality with resilient infrastructure, providing reliable enterprise-grade support for a better user experience

- Reduced manual overhead on data migration efforts, reallocating staff resources to strategic initiatives

- Predictable long-term cost recovery model with transparent pricing

- Support for future initiatives, including AI/ML workflows, secure multi-protocol access, and ever-evolving scientific workflows

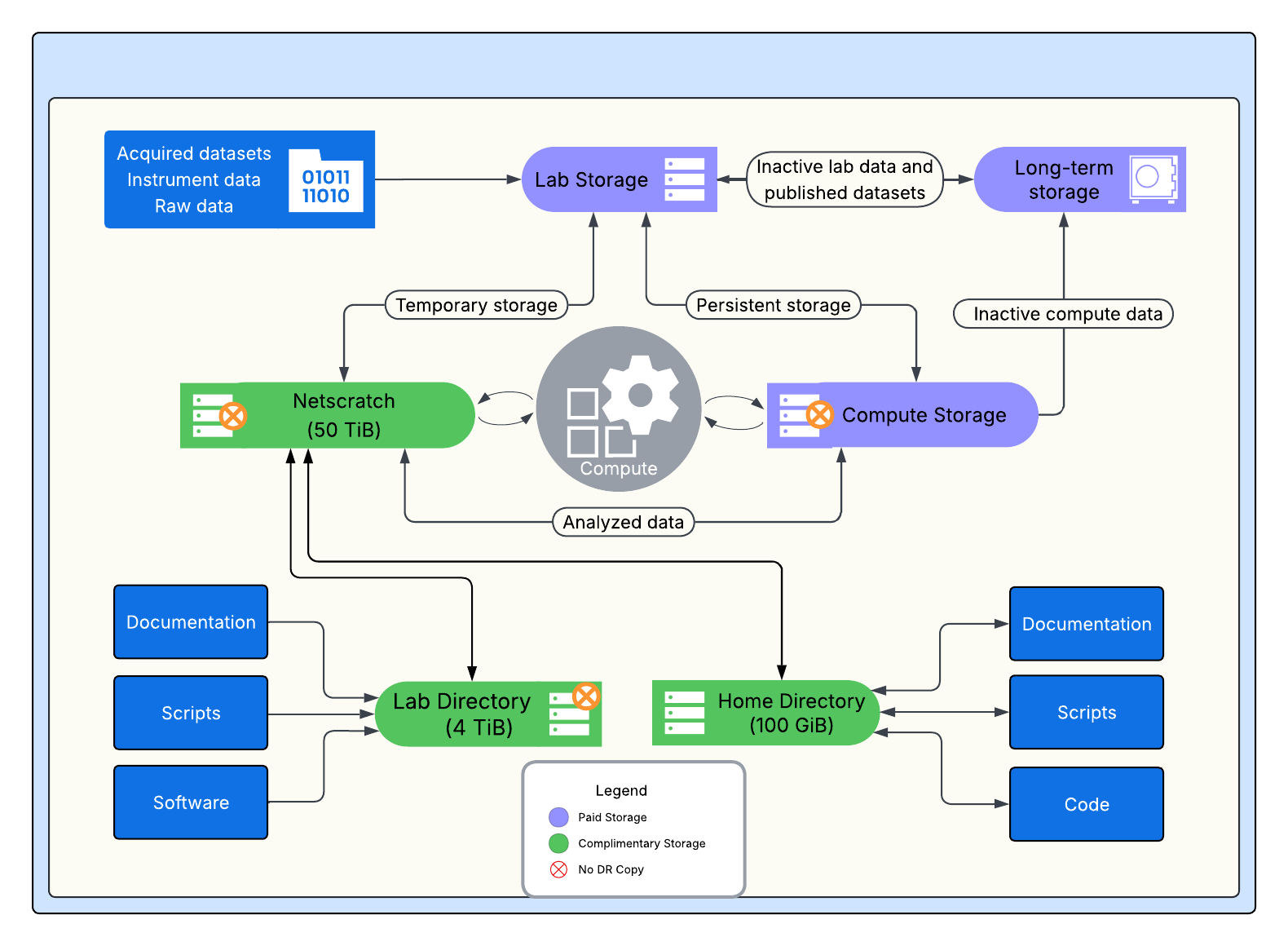

Identifying an appropriate storage location for your research data is a critical step in the research data lifecycle, as it ensures research data remains usable. We recommend you review the available storage options and select the storage offering that best fits your group’s intended workflow, keeping in mind how often the data will be used and accessed. The offerings below are designed to store research data, rather than administrative data.

Each FASRC account is provided with a 100GiB Home Directory for individual use. Each PI or Lab Account also receives a 4TiB Lab Directory, for use by all members of the PI’s lab group, and a 50TiB allotment of networked scratch (netscratch). See the matrix below for more details.

*Snapshots are copies of a directory taken at a specific moment in time. They offer labs a self-service recovery option for overwritten or deleted files within the specific time period. Disaster recovery is a copy of an entire file system that can be used internally by FASRC in case of system-wide failure.

Storage Offerings (Paid)

| | Compute Storage | Lab Storage | Long-term Storage | Tape (NESE) | FASSE |
|---|---|---|---|---|---|
| Description | Active storage for data analysis; data readily utilized and accessed. Highly performant cluster adjacent storage. Optimized for AI/ML workflows. | General purpose storage for raw and project data. Not intended for heavy computational workflows. Can be used as buffer storage for lab instruments. | Long-term storage of research data to meet institutional data retention and compliance requirements. On-premise long-term storage option for Harvard affiliated labs. | Long-term storage of inactive research data after project completion or data retention purposes. Externally managed by Northeast Storage Exchange (NESE). | Secure storage environment for analysis or sensitive data, such as data generated using Data Use Agreements (DUAs) or IRB |
| Performance | High | Moderate | Low | None | Moderate |
| Size | Available upon request | Available upon request | Available upon request | 20TB increments. Ten thousand files per folder. File sizes between 1GiB and 100GiB. | Available upon request |
| Folder Path | /n/compute_storage/pi_lab | /n/lab_storage/pi_lab | /n/long_term/pi_lab | Transfer data to Tape using Globus | /n/fasse/pi_lab_projectname_l3 |
| Retention | Weekly snapshots for 2 weeks. No disaster recovery. | Daily snapshots weekly. Weekly snapshots every 4 weeks. Includes disaster recovery. | No snapshots. Disaster recovery at additional cost. | No snapshots. No disaster recovery. | Daily snapshots weekly. Weekly snapshots every 4 weeks. Includes disaster recovery. Encryption at rest included. |
| Cost | $150/yr per TiB | $125/yr per TiB | $30/yr per TiB | $15/yr per TB | $150/yr per TiB |
| Security Level | Level 2 | Level 2 | Level 2 (Up to Level 3) | Level 2 | Up to Level 3 |
| Storage | Request storage allocation | Request storage allocation | Request storage allocation | Request storage allocation | Request storage allocation |
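
As a rough illustration of how the rates in the table translate into a yearly charge, the following Python sketch multiplies a requested size by the listed rate. The function names, and the rule that Tape requests round up to the next 20TB increment, are assumptions made for this example and not FASRC billing logic. The sketch also ignores the additional-cost disaster recovery option for Long-term Storage. Note that Tape is priced per TB (10^12 bytes) while the other offerings are priced per TiB (2^40 bytes).

```python
import math

# Annual rates copied from the Storage Offerings (Paid) table.
# Tape is priced per TB; every other offering is priced per TiB.
RATES_PER_YEAR = {
    "compute": (150, "TiB"),
    "lab": (125, "TiB"),
    "long_term": (30, "TiB"),
    "tape": (15, "TB"),
    "fasse": (150, "TiB"),
}

# The table lists Tape in 20TB increments.
TAPE_INCREMENT_TB = 20


def annual_cost(offering, size):
    """Estimate the yearly cost in dollars.

    `size` is in the unit the offering is priced in (TB for tape,
    TiB otherwise).
    """
    rate, _unit = RATES_PER_YEAR[offering]
    if offering == "tape":
        # Assumption: a request is rounded up to the next 20TB increment.
        size = math.ceil(size / TAPE_INCREMENT_TB) * TAPE_INCREMENT_TB
    return rate * size


def tib_to_tb(tib):
    """Convert TiB (2**40 bytes) to TB (10**12 bytes)."""
    return tib * 2**40 / 10**12
```

For example, `annual_cost("lab", 10)` gives 1250 for a 10TiB Lab Storage allocation, and `annual_cost("tape", 45)` gives 900 because 45TB rounds up to 60TB under the increment assumption. Confirm actual charges in Coldfront or with FASRC before budgeting.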

Requesting Storage

To request a new storage allocation, or to modify an existing storage allocation, please log in to the Coldfront Storage Allocation tool using your FASRC username and password. If you have difficulties with your password, you can reset it. You may also need to clear the cache in your web browser. If requesting a new storage allocation, you will need to indicate which storage offering you would like to acquire and the associated 33-digit billing code. If you do not have a FASRC Account, you will need to request one before logging in to Coldfront.

PIs, General Managers, and Storage Managers are able to request new allocations, or make changes to existing allocations. PIs can email rchelp@rc.fas.harvard.edu if they would like to assign a General Manager or Storage Manager role to their lab, as this will allow the lab member to add and/or modify storage allocations.

*NOTE: All new Lab Storage allocation requests will be fulfilled beginning in mid-March. All new FASSE Storage allocation requests will be fulfilled beginning in late March. Compute Storage Allocation requests will continue to be stored on Tier 0 until the Compute Storage environment is available later this Spring. For more information about the timeline of the Storage Modernization Initiative, please visit the Data Storage website.

Storage Offerings (Complimentary*)

| | Home Directory | Lab Directory | netscratch |
|---|---|---|---|
| Description | Personal user storage. Not recommended for computational purposes. | General lab storage. Install software to be referenced from netscratch. | Temporary storage location for high performance data analysis. |
| Performance | Moderate | Moderate | High |
| Size | 100GiB (fixed) | 4TiB (fixed) | 50TiB (fixed) |
| Mount | /n/homeNN/username | /n/holylabs | /n/netscratch |
| Retention | Daily snapshots weekly. Weekly snapshots every 4 weeks. Disaster recovery. | No snapshots. No disaster recovery. | No snapshots. No disaster recovery. 90-day retention policy. |
| Cost | None | None | None |
| Security Level | Up to Level 2 | Up to Level 2 | Up to Level 2 |
| Storage | Folder generated for each user when granted cluster access. Limited to 100GiB. | Folder generated for each approved PI and their group. Limited to 4TiB. | Accessible to group members. |

*Harvard-sponsored
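
Because netscratch has a 90-day retention policy and no snapshots, it can help to check which files are approaching that age before they are removed. The sketch below lists files whose modification time is older than a given number of days. This page does not say which timestamp the retention policy uses, so relying on modification time is an assumption, and the function name is hypothetical.

```python
import os
import time

# 90-day retention policy listed for netscratch in the table above.
RETENTION_DAYS = 90


def files_older_than(root, days=RETENTION_DAYS, now=None):
    """Return a sorted list of files under `root` not modified in `days` days.

    Assumption: age is measured by modification time (st_mtime); the
    actual policy may use a different timestamp.
    """
    now = time.time() if now is None else now
    cutoff = now - days * 86400
    stale = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtime = os.lstat(path).st_mtime
            except OSError:
                # The file vanished or is unreadable; skip it.
                continue
            if mtime < cutoff:
                stale.append(path)
    return stale if not stale else sorted(stale)
```

Calling `files_older_than` on your group's folder under `/n/netscratch` with a smaller `days` value, such as 75, gives advance warning of files that should be copied to a persistent storage offering.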

Data Storage Workflow

Default Directory Structure

Two subdirectories will be present by default within the parent directory to enable easier Globus transfers and provide some initial guidance for how to organize storage.

Lab: This directory is intended as the primary working directory. It is also the directory shared out via Globus. By default, folders in this subdirectory are visible to the whole lab. Individual users may update their permissions to adjust access as they like. However, we highly recommend keeping access open to all lab members, as this allows for easier collaboration and for data cleanup after you leave the university.

Everyone: This directory is visible to anyone on the HPC cluster and is intended for collaboration with other labs on the cluster. Data in this directory is by default owned by the lab that hosts the data. Note that this directory is not available on Globus and is intended only for internal sharing.

While this is the default structure, labs may request additional folders be set up. Please email rchelp@rc.fas.harvard.edu if you have questions.

Directory structures on the cluster may differ depending on when they were created. Some older storage folders may have a third subdirectory called Users. We have deprecated use of this folder because it made data difficult for the lab and PIs to access, especially after users have left the university. If you are migrating data from a storage system that has a Users subdirectory, we recommend moving that data into the Lab directory and making it available to the lab to view and access.
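
To follow the recommendation of keeping data open to lab members, it can be useful to find entries that the group cannot read, for example after moving data out of an old Users subdirectory. The sketch below is one way to do that, assuming lab access is granted through ordinary POSIX group permission bits. It does not inspect ACLs or verify which group owns each entry, and the function name is hypothetical.

```python
import os
import stat


def not_group_readable(root):
    """Return a sorted list of paths under `root` the group cannot read.

    Files need the group read bit; directories need both group read and
    group execute so that members can list and enter them.
    Symbolic links are skipped.
    """
    hidden = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode
            except OSError:
                # The entry vanished or cannot be inspected; skip it.
                continue
            if stat.S_ISLNK(mode):
                continue
            needed = stat.S_IRGRP
            if stat.S_ISDIR(mode):
                needed |= stat.S_IXGRP
            if mode & needed != needed:
                hidden.append(path)
    return sorted(hidden)
```

Running `not_group_readable` on your lab's Lab subdirectory returns the entries to review. Note that `os.walk` cannot descend into directories that the person running the script cannot read, so results are most complete when the data owner runs it.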

Contact:

If you have questions regarding the data storage options at FASRC, please email the Research Data Manager at rdm@rc.fas.harvard.edu.