
OpenMP

Introduction

OpenMP (Open Multi-Processing) is an application programming interface (API) for multi-platform shared-memory parallel programming in C, C++, and Fortran. It combines compiler directives, runtime library routines, and environment variables to distribute computation across multiple threads, so applications can use multiple processing cores in a portable way. Because the directives can be added incrementally to existing code, OpenMP is a popular choice for parallel programming, particularly in scientific and technical computing.

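Note: Despite the similar names, OpenMP and Open MPI are unrelated. OpenMP is a shared-memory multithreading API, whereas Open MPI is an implementation of the Message Passing Interface (MPI) standard for inter-process communication.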

Basic OpenMP Concepts

- Parallel region: A block of code that can be executed in parallel by multiple threads.

- Thread: A separate flow of execution within a program.

- Team: A group of threads that work together to execute a parallel region.

Executing Multiple Threads

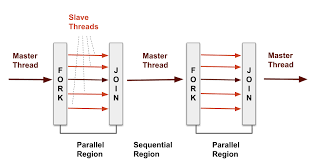

OpenMP uses the fork-join model of multithreading: a master thread forks off several worker threads at the start of a parallel region, and the workers join back into the master thread when the region ends.
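The fork-join model can be sketched in a few lines of C. This is a minimal illustration, not code from the training material: the helper name `run_parallel_region` is hypothetical, and the `_OPENMP` guards are an assumption added so that the file still builds, serially, when OpenMP is not enabled.

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: forks a team, lets every thread check in,
   and returns how many threads took part. */
int run_parallel_region(void)
{
    int checked_in = 0;

    /* Fork: the master thread creates a team of threads here. */
    #pragma omp parallel
    {
        int id = 0;                  /* serial fallback */
#ifdef _OPENMP
        id = omp_get_thread_num();   /* 0 is the master thread */
#endif
        printf("hello from thread %d\n", id);

        /* Each thread increments the shared counter safely. */
        #pragma omp atomic
        checked_in++;
    }   /* Join: implicit barrier, only the master thread continues. */

    return checked_in;
}
```

Calling `run_parallel_region()` prints one line per thread in an unspecified order; the return value equals the team size chosen by the runtime.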

Common OpenMP Directives and Clauses

OpenMP directives are used to specify parallel regions and control the execution of threads. Here are some common OpenMP directives:

- `#pragma omp parallel`: Creates a team of threads.

- `#pragma omp for`: Distributes loop iterations among threads.

- `#pragma omp sections`: Executes different blocks of code in parallel.

- `#pragma omp single`: Executes a block of code by only one thread.

- `#pragma omp critical`: Protects a block of code from simultaneous access by multiple threads.

- `#pragma omp barrier`: Synchronizes all threads in a team.

- `#pragma omp atomic`: Performs atomic updates on a variable.

- `#pragma omp master`: Executes a block of code by the master thread only.

Directives can be refined with clauses. Common clauses include:

- `num_threads(int)`: Specifies the number of threads to use.

- `shared(list)`: Declares variables to be shared among threads.

- `private(list)`: Declares variables to be private to each thread.

- `firstprivate(list)`: Initializes private variables with the value of the original variable.

- `lastprivate(list)`: Copies the value of the private variable from the last iteration to the original variable.

- `reduction(operator: list)`: Combines each thread's private copy of the listed variables into a single value. Note that `reduction` is a clause, not a standalone directive.

- `schedule(type, chunk)`: Specifies how loop iterations are scheduled (e.g., `static`, `dynamic`, `guided`, `auto`, `runtime`).
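As an illustration of how a directive and its clauses combine, the sketch below sums squares with a combined `parallel for` directive plus `reduction` and `schedule` clauses. The function name `sum_squares` is hypothetical and chosen only for this example.

```c
/* Hypothetical example: sum of i*i for i = 1..n. */
long sum_squares(int n)
{
    long total = 0;

    /* parallel for : fork a team and split the iterations among it
       reduction    : each thread sums into a private copy of `total`,
                      and the copies are added together at the end
       schedule     : static hands out equal-sized blocks up front */
    #pragma omp parallel for reduction(+:total) schedule(static)
    for (int i = 1; i <= n; i++) {
        total += (long)i * i;
    }

    return total;
}
```

The loop variable `i` is automatically private to each thread, so no `private` clause is needed here.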

Advanced OpenMP Concepts

- Nested parallelism: Opening a parallel region inside another parallel region, so that each thread of the outer team can fork its own inner team.

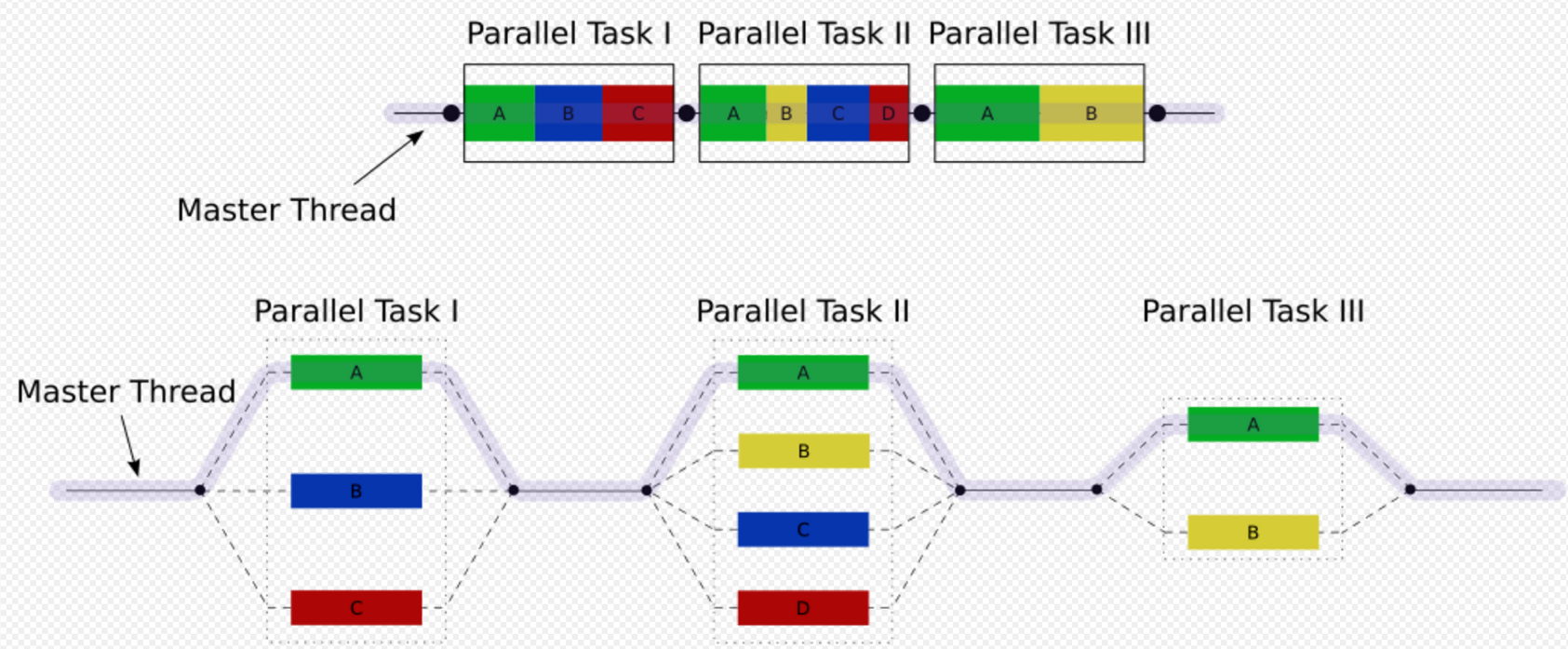

- Task parallelism: Using OpenMP tasks to execute independent blocks of code in parallel.

- Data parallelism: Using OpenMP to parallelize loops that operate on large datasets.
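Task parallelism is the least obvious of these from a description alone, so here is a minimal sketch using the classic recursive Fibonacci computation. The function names `fib_task` and `fib` are hypothetical, and the recursion is deliberately naive; real code would normally stop creating tasks below some problem size.

```c
/* Hypothetical helper: each recursive call becomes an OpenMP task. */
static long fib_task(int n)
{
    long a, b;

    if (n < 2) {
        return n;
    }

    /* The two subproblems are independent, so each can run as a task. */
    #pragma omp task shared(a)
    a = fib_task(n - 1);

    #pragma omp task shared(b)
    b = fib_task(n - 2);

    /* Wait for both child tasks before combining their results. */
    #pragma omp taskwait
    return a + b;
}

long fib(int n)
{
    long result = 0;

    /* Fork a team, but let a single thread create the root task;
       the other threads pick up tasks as they are generated. */
    #pragma omp parallel
    {
        #pragma omp single
        result = fib_task(n);
    }

    return result;
}
```

Data parallelism, by contrast, is usually expressed with `#pragma omp for` over a loop rather than with explicit tasks.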

Best Practices

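- Use the `-fopenmp` flag: Always compile code with the `-fopenmp` flag (GCC and Clang; other compilers use a different flag) to enable OpenMP support.

- Use `omp_get_num_threads()`: Use the `omp_get_num_threads()` function to determine the number of threads in the current team. Outside a parallel region it returns 1; use `omp_get_max_threads()` to find out how many threads a new parallel region may use.

- Use `omp_set_num_threads()`: Use the `omp_set_num_threads()` function to set the number of threads used in subsequent parallel regions.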
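The two runtime routines above can be tried out with a short sketch like the following. The helper name `team_size_for_request` is hypothetical, and the `_OPENMP` guards are an assumption added so the file also builds without `-fopenmp` (in which case it simply reports a team of one).

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: requests `requested` threads and returns the
   team size the runtime actually provided. */
int team_size_for_request(int requested)
{
    int team_size = 1;               /* serial fallback */

#ifdef _OPENMP
    omp_set_num_threads(requested);  /* applies to later parallel regions */
#else
    (void)requested;                 /* unused in a serial build */
#endif

    #pragma omp parallel
    {
        /* One thread records the size of the current team. */
        #pragma omp single
        {
#ifdef _OPENMP
            team_size = omp_get_num_threads();
#endif
        }
    }

    return team_size;
}
```

With GCC, a file containing this function would be compiled with something like `gcc -fopenmp file.c`. The runtime may provide fewer threads than requested, so the return value should be treated as an upper-bounded result rather than a guarantee.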

Examples

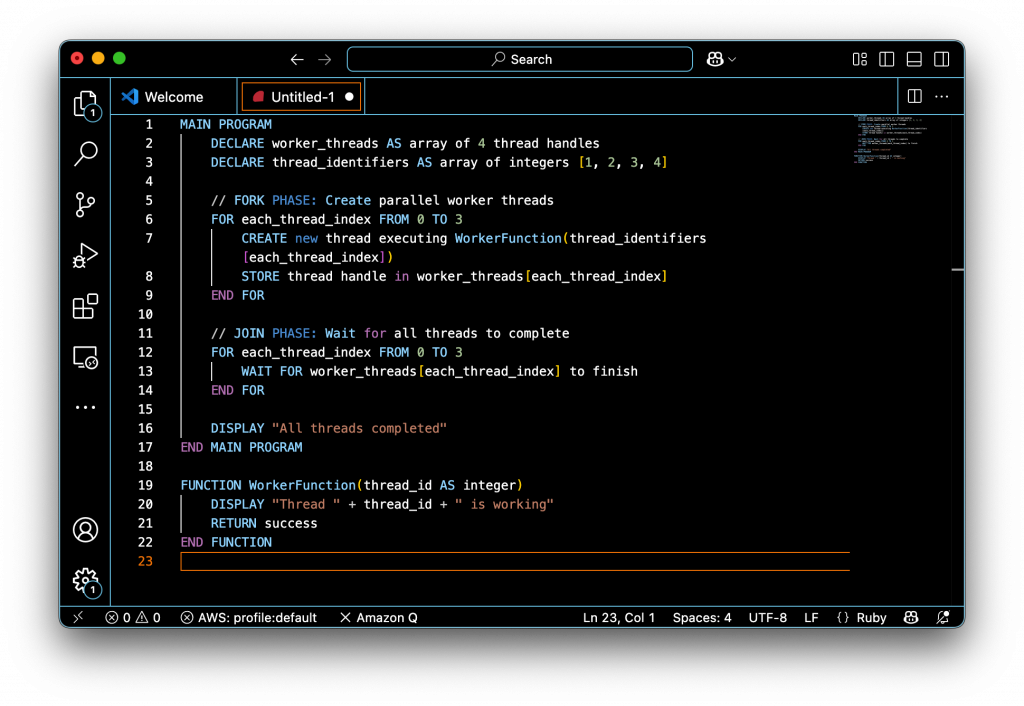

Basic Pseudocode Example

Is Upgrading Existing Code This Easy?

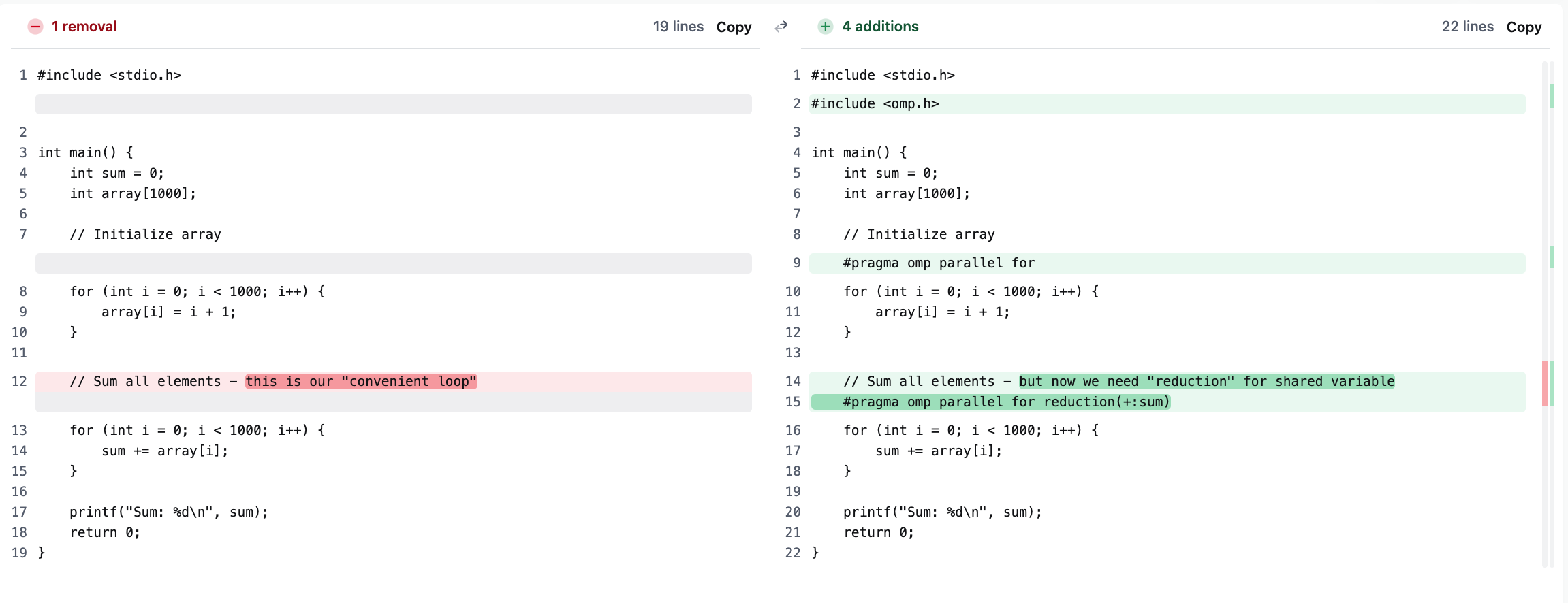

In some cases, upgrading existing code is easy, as with this C code:
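A minimal sketch of such an easy case, assuming a hypothetical `scale_array` function whose loop iterations are fully independent of one another:

```c
/* Hypothetical example: multiply every element by `factor`.
   Each iteration touches a different element, so the only change
   needed to parallelize the serial loop is the single pragma line. */
void scale_array(double *data, int n, double factor)
{
    #pragma omp parallel for
    for (int i = 0; i < n; i++) {
        data[i] *= factor;
    }
}
```

Removing the pragma gives back the original serial code, which is what makes this kind of upgrade low-risk.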

But don’t get too excited! Poorly implemented OpenMP upgrades easily lead to race conditions, where multiple threads read and write the same variable without synchronization.
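To make the hazard concrete, the sketch below contrasts a racy loop with a corrected one. Both function names are hypothetical. The first version looks like a harmless one-line upgrade, but every thread updates the shared `count` at once, so its result is unpredictable when run with more than one thread.

```c
/* Racy: `count` is shared, and `count++` is a read-modify-write that
   several threads can interleave, losing updates. Do not use. */
int count_multiples_racy(int n, int k)
{
    int count = 0;

    #pragma omp parallel for
    for (int i = 1; i <= n; i++) {
        if (i % k == 0) {
            count++;             /* data race */
        }
    }
    return count;
}

/* Fixed: the reduction clause gives each thread a private counter
   and adds the private counters together when the loop ends. */
int count_multiples(int n, int k)
{
    int count = 0;

    #pragma omp parallel for reduction(+:count)
    for (int i = 1; i <= n; i++) {
        if (i % k == 0) {
            count++;
        }
    }
    return count;
}
```

Protecting the increment with `#pragma omp atomic` or `#pragma omp critical` would also remove the race, though a reduction usually involves less synchronization.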

Examples in User Codes and our Training Video

These examples are used in the Parallel Job Workflows training session:

- Example1: OpenMP parallel region in C

- Example2: OpenMP diagonalization of a symmetric matrix in Fortran

- Example3: OpenMP scaling illustration – Parallel Monte Carlo calculation of Pi in C